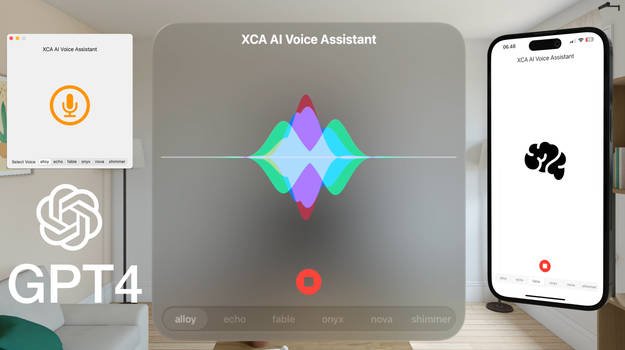

Boswell

A ChatGPT-based iOS voice app written by ChatGPT-4 (with help from Ted Barnett). Final version will include a personal life story interviewer that will generate a voice-and-text biography for the user. Named after the 18th-century biographer James Boswell.

v01: basic ChatGPT conversation (with voice) on iOS

Note: before running this app on your iPhone, you will need to edit ViewController.swift and replace the text “PASTE_IN_YOUR_OPENAI_API_KEY_HERE” with a valid OpenAI API key.

I’m not an experienced iOS or Swift developer. I was only able to do this because ChatGPT knows how to code. But I would appreciate any help with this project by more knowledgeable iOS developers. See the Issues list if you are interested.

Attached below is the full ChatGPT-4 conversation (including errors, apologies, and corrections from ChatGPT) that generated this code:

ChatGPT-4 Conversation

TED BARNETT:

I would like to write an iPhone application that will enable me to talk (with my voice) to the GPT system. I would like the response to be provided in both text and audio form, ideally though the Siri voice system on the iPhone, but using whatever text-to-speech system is appropriate. How should I go about building this?

ChatGPT:

- To create an iPhone application that enables you to talk to the GPT system using your voice and receive responses in both text and audio form, you should follow these steps:

- Learn iOS development: Familiarize yourself with the Swift programming language and the Xcode development environment, which are required for iOS development. Apple provides comprehensive resources and tutorials on their developer portal: https://developer.apple.com/

- Set up a new Xcode project: Create a new iOS project in Xcode, choosing the “App” template. Give your project a name and select your organization and other settings.

- Implement a user interface: Design a simple user interface (UI) for your app using the Interface Builder in Xcode. You’ll need at least a button to start/stop voice input and a text view to display the conversation.

- Integrate Speech Recognition: Use Apple’s Speech framework to convert your voice input to text. You will need to request permission to access the microphone and implement the necessary methods for real-time speech-to-text conversion. Apple’s documentation can help you get started: https://developer.apple.com/documentation/speech

- Connect to OpenAI API: Once you have converted your speech to text, you’ll need to interact with the GPT system. You can use OpenAI’s API to do this. To access the API, you’ll need to sign up for an API key (if you haven’t already) and follow the API documentation: https://beta.openai.com/docs/

- Integrate Networking: Since the OpenAI API requires internet connectivity, use URLSession or a third-party library (like Alamofire) to make network requests from your app.

- Text-to-Speech (TTS) Conversion: After receiving the GPT system’s response in text form, you’ll need to convert it into speech. You can use Apple’s AVSpeechSynthesizer for this purpose, which provides the default Siri voice. Read Apple’s documentation on how to use AVSpeechSynthesizer: https://developer.apple.com/documentation/avfoundation/avspeechsynthesizer

- Handle Errors and Edge Cases: Implement error handling for scenarios like network failures or API errors. Also, manage the app’s behavior when the user denies microphone access.

- Test Your App: Thoroughly test your app on both simulators and physical devices to ensure it works as expected.

- App Store Submission: Once you’ve completed development and testing, you can submit your app to the App Store for approval. Follow Apple’s guidelines and submission process.

Here’s a high-level outline of how your code should be structured:

- Configure and request microphone access.

- Set up speech recognition and start listening when the user taps the button.

- Convert the user’s voice input to text and send it to the OpenAI API.

- Receive the GPT system’s response and display it in the text view.

- Convert the GPT system’s response to speech using AVSpeechSynthesizer.

- Handle errors and edge cases throughout the process.

By following these steps, you should be able to create an iPhone application that allows you to interact with the GPT system using your voice, and receive responses in both text and audio form.

TED BARNETT:

Can you generate Swift code for that code, just for #1 above?

ChatGPT:

- Sure, here’s a Swift code snippet to configure and request microphone access for your iOS app:

First, you’ll need to import the necessary frameworks in your view controller file:

import AVFoundation

import Speech

Next, you’ll need to add a function to request microphone access:

func requestMicrophoneAccess() {

// Request microphone access

AVAudioSession.sharedInstance().requestRecordPermission { granted in

DispatchQueue.main.async {

if granted {

// Microphone access granted

print("Microphone access granted.")

} else {

// Microphone access denied

print("Microphone access denied.")

// You can show an alert to inform the user that microphone access is required

}

}

}

}

You should call this function, for example, in the viewDidLoad() method of your view controller:

override func viewDidLoad() {

super.viewDidLoad()

requestMicrophoneAccess()

}

Additionally, you need to add a key-value pair to your app’s Info.plist file to explain why your app needs microphone access. Open Info.plist, right-click, and select “Add Row.” Then, add the “Privacy – Microphone Usage Description” key and set its value to a description of why your app needs microphone access, e.g., “This app requires microphone access to convert your speech to text.”

With these code snippets in place, your app will request microphone access when it starts. If the user grants permission, you’ll see “Microphone access granted” printed in the console. If not, you’ll see “Microphone access denied,” and you can show an alert to inform the user that microphone access is required for your app to function properly.

TED BARNETT:

Code for #2?

ChatGPT:

- Here’s the Swift code to set up a new Xcode project and create a simple user interface for your app:

- Open Xcode and create a new project by selecting “Create a new Xcode project” or by going to “File” > “New” > “Project.” Choose the “App” template under the iOS tab.

- Give your project a name, organization identifier, and select the interface as “Storyboard” and the language as “Swift.” Click “Next” and choose a location to save your project.

- Design a simple user interface (UI) for your app using the Interface Builder in Xcode. You’ll need a button to start/stop voice input and a text view to display the conversation.

In your Main.storyboard, drag and drop a “Text View” and a “Button” from the Object Library onto your view controller’s scene. Set appropriate constraints and adjust their appearances as needed.

- Create outlets and an action for the UI elements in your view controller:

In ViewController.swift, add the following outlets and action:

import UIKit

class ViewController: UIViewController {

@IBOutlet weak var textView: UITextView!

@IBOutlet weak var recordButton: UIButton!

override func viewDidLoad() {

super.viewDidLoad()

}

@IBAction func recordButtonTapped(_ sender: UIButton) {

// Your code to start/stop voice input will be added here

}

}

- Connect the outlets and action to the UI elements:

In Main.storyboard, control-drag from the text view to the textView outlet in ViewController.swift, and control-drag from the button to the recordButtonTapped action.

Now you have a simple user interface with a text view to display the conversation and a button to start/stop voice input. In the next steps, you’ll add the code to implement voice recognition and other functionalities as described in the previous answers.

TED BARNETT:

I actually wanted code for item 2 on your last list above (“Here’s a high-level outline of how your code should be structured”)

ChatGPT:

- Apologies for the confusion. Here’s the Swift code snippet for setting up speech recognition and starting to listen when the user taps the button (step 2 in the high-level outline):

- Make sure you’ve imported the necessary frameworks:

import AVFoundation

import Speech

- Make your view controller conform to

SFSpeechRecognizerDelegate:

class ViewController: UIViewController, SFSpeechRecognizerDelegate {

- Add the necessary properties for speech recognition:

private let speechRecognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US"))!

private var recognitionRequest: SFSpeechAudioBufferRecognitionRequest?

private var recognitionTask: SFSpeechRecognitionTask?

private let audioEngine = AVAudioEngine()

- Implement a function to configure speech recognition:

func configureSpeechRecognition() {

speechRecognizer.delegate = self

SFSpeechRecognizer.requestAuthorization { authStatus in

DispatchQueue.main.async {

switch authStatus {

case .authorized:

print("Speech recognition authorized.")

self.recordButton.isEnabled = true

case .denied, .restricted, .notDetermined:

print("Speech recognition not authorized.")

self.recordButton.isEnabled = false

@unknown default:

fatalError("Unknown authorization status.")

}

}

}

}

Call configureSpeechRecognition() in the viewDidLoad() method:

override func viewDidLoad() {

super.viewDidLoad()

configureSpeechRecognition()

}

- Implement the

recordButtonTapped(_:)action to start and stop speech recognition:

@IBAction func recordButtonTapped(_ sender: UIButton) {

if audioEngine.isRunning {

audioEngine.stop()

recognitionRequest?.endAudio()

recordButton.setTitle("Start Recording", for: .normal)

} else {

startRecording()

recordButton.setTitle("Stop Recording", for: .normal)

}

}

- Implement the

startRecording()function to start speech recognition:

func startRecording() {

if recognitionTask != nil {

recognitionTask?.cancel()

recognitionTask = nil

}

let audioSession = AVAudioSession.sharedInstance()

try? audioSession.setCategory(.record, mode: .measurement, options: .duckOthers)

try? audioSession.setActive(true, options: .notifyOthersOnDeactivation)

recognitionRequest = SFSpeechAudioBufferRecognitionRequest()

let inputNode = audioEngine.inputNode

guard let recognitionRequest = recognitionRequest else {

fatalError("Unable to create an SFSpeechAudioBufferRecognitionRequest object")

}

recognitionRequest.shouldReportPartialResults = true

recognitionTask = speechRecognizer.recognitionTask(with: recognitionRequest) { result, error in

var isFinal = false

if let result = result {

self.textView.text = result.bestTranscription.formattedString

isFinal = result.isFinal

}

if error != nil || isFinal {

self.audioEngine.stop()

inputNode.removeTap(onBus: 0)

self.recognitionRequest = nil

self.recognitionTask = nil

self.recordButton.isEnabled = true

}

}

let recordingFormat = inputNode.outputFormat(forBus: 0)

inputNode.installTap(onBus: 0, bufferSize: 1024, format: recordingFormat) { (buffer, _) in

self.recognitionRequest?.append(buffer)

}

audioEngine.prepare()

try? audioEngine.start()

textView.text = "(Listening...)"

}

Now when you tap the record button, the app will start listening and transcribing your speech in real

TED BARNETT:

and code for step 3 in the high-level outline?

ChatGPT:

- Here’s the Swift code snippet for step 3 in the high-level outline, which sends the user’s voice input (converted to text) to the OpenAI API:

- Add a function to send the text input to the OpenAI API:

import Foundation

// Replace YOUR_API_KEY with your actual OpenAI API key

let openAI_APIKey = "YOUR_API_KEY"

func sendToOpenAI(text: String, completion: @escaping (String?, Error?) -> Void) {

let url = URL(string: "https://api.openai.com/v1/engines/davinci-codex/completions")!

var request = URLRequest(url: url)

request.httpMethod = "POST"

request.setValue("Bearer \(openAI_APIKey)", forHTTPHeaderField: "Authorization")

request.setValue("application/json", forHTTPHeaderField: "Content-Type")

let prompt = "You: \(text)\nAI:"

let requestData: [String: Any] = [

"prompt": prompt,

"max_tokens": 150,

"n": 1,

"stop": ["\n"],

"temperature": 0.5

]

request.httpBody = try? JSONSerialization.data(withJSONObject: requestData, options: [])

let task = URLSession.shared.dataTask(with: request) { data, response, error in

if let error = error {

completion(nil, error)

} else if let data = data {

do {

if let json = try JSONSerialization.jsonObject(with: data, options: []) as? [String: Any],

let choices = json["choices"] as? [[String: Any]],

let firstChoice = choices.first,

let text = firstChoice["text"] as? String {

completion(text.trimmingCharacters(in: .whitespacesAndNewlines), nil)

} else {

completion(nil, NSError(domain: "", code: 0, userInfo: [NSLocalizedDescriptionKey: "Invalid response"]))

}

} catch {

completion(nil, error)

}

}

}

task.resume()

}

- Update the

recordButtonTapped(_:)action to callsendToOpenAI(text:completion:)when speech recognition stops:

@IBAction func recordButtonTapped(_ sender: UIButton) {

if audioEngine.isRunning {

audioEngine.stop()

recognitionRequest?.endAudio()

recordButton.setTitle("Start Recording", for: .normal)

// Call the sendToOpenAI function when the user stops talking

sendToOpenAI(text: textView.text) { response, error in

DispatchQueue.main.async {

if let error = error {

self.textView.text = "Error: \(error.localizedDescription)"

} else if let response = response {

self.textView.text += "\nAI: \(response)"

}

}

}

} else {

startRecording()

recordButton.setTitle("Stop Recording", for: .normal)

}

}

Now, when the user stops talking, the app will send the text input to the OpenAI API and display the AI’s response in the text view. Note that this example uses the OpenAI API v1, which might not be the latest version at the time you implement this. Be sure to check OpenAI’s official API documentation to ensure you’re using the latest version and features.

TED BARNETT:

Thanks. Can you provide code for step 4 in the high-level outline?

ChatGPT:

- Here’s the Swift code snippet for step 4 in the high-level outline, which converts the GPT system’s response to speech using AVSpeechSynthesizer:

- Add a property for the AVSpeechSynthesizer in your view controller:

private let speechSynthesizer = AVSpeechSynthesizer()

- Create a function to speak the text using AVSpeechSynthesizer:

func speak(text: String) {

let speechUtterance = AVSpeechUtterance(string: text)

speechUtterance.voice = AVSpeechSynthesisVoice(language: "en-US")

speechUtterance.rate = 0.5

speechSynthesizer.speak(speechUtterance)

}

- Update the

sendToOpenAI(text:completion:)completion handler to call thespeak(text:)function:

sendToOpenAI(text: textView.text) { response, error in

DispatchQueue.main.async {

if let error = error {

self.textView.text = "Error: \(error.localizedDescription)"

} else if let response = response {

let aiResponse = "AI: \(response)"

self.textView.text += "\n\(aiResponse)"

// Speak the AI's response

self.speak(text: aiResponse)

}

}

}

Now, when the app receives the AI’s response, it will not only display the response in the text view but also speak it using the AVSpeechSynthes

TED BARNETT:

Can you provide code for step 5 in the high-level outline?

ChatGPT:

- Here’s the Swift code snippet for step 5 in the high-level outline, which stores the conversation history and appends new responses:

- Add a property to store the conversation history:

private var conversationHistory: [String] = []

- Update the

sendToOpenAI(text:completion:)completion handler to append user input and AI’s response to the conversation history:

sendToOpenAI(text: textView.text) { response, error in

DispatchQueue.main.async {

if let error = error {

self.textView.text = "Error: \(error.localizedDescription)"

} else if let response = response {

let userInput = "You: \(self.textView.text)"

let aiResponse = "AI: \(response)"

// Append the user input and AI's response to the conversation history

self.conversationHistory.append(contentsOf: [userInput, aiResponse])

// Update the text view with the full conversation history

self.textView.text = self.conversationHistory.joined(separator: "\n")

// Speak the AI's response

self.speak(text: aiResponse)

}

}

}

Now, the app will store the conversation history in the conversationHistory array and display the full conversation in the text view. Whenever a new interaction takes place, the user’s input and the AI’s response will be appended to the conversation history.

TED BARNETT:

Is that all of Step 5 above?

ChatGPT:

- Yes, that’s all for step 5. The provided code snippet maintains the conversation history by storing it in the

conversationHistoryarray and updates the text view to display the full conversation, including both user inputs and AI responses.

Here’s a summary of the code changes for step 5:

- We added a

conversationHistoryproperty to store the conversation as an array of strings. - When sending user input to the OpenAI API and receiving the AI’s response, we append both the user input and the AI response to the

conversationHistoryarray. - After each interaction, we update the text view to display the full conversation history by joining the

conversationHistoryarray with newline separators.

With these changes, the app can keep track of the entire conversation and display it in the text view.

TED BARNETT:

When I implement the 5 steps above in Xcode, do I copy all of these code snippets into a single file? If not, what files are they each stored in?

ChatGPT:

- When implementing the 5 steps in Xcode, you can put most of the code snippets in a single file, which is the view controller file that manages the main screen of your app, usually named

ViewController.swift.

Here’s a summary of where each code snippet should be placed:

- Request microphone access: Add the

requestMicrophoneAccess()function to yourViewController.swiftfile, and call it in theviewDidLoad()method. - Configure and start speech recognition: Place the properties, functions, and actions related to speech recognition in your

ViewController.swiftfile. Make sure to callconfigureSpeechRecognition()in theviewDidLoad()method. - Send text to OpenAI API: Add the

sendToOpenAI(text:completion:)function to yourViewController.swiftfile. Update therecordButtonTapped(_:)action in the same file to call this function when the user stops talking. - Convert text to speech: Add the

speechSynthesizerproperty and thespeak(text:)function to yourViewController.swiftfile. Update the completion handler ofsendToOpenAI(text:completion:)in the same file to call thespeak(text:)function with the AI’s response. - Store and display the conversation history: Add the

conversationHistoryproperty to yourViewController.swiftfile. Update the completion handler ofsendToOpenAI(text:completion:)in the same file to append user input and the AI response to the conversation history, and update the text view with the full conversation.

By placing

TED BARNETT:

I think you didn’t finish that last answer. Please provide the missing text after “By placing”.

ChatGPT:

- Apologies for the incomplete response. Here’s the continuation:

By placing all the code snippets in the ViewController.swift file, you can keep the implementation organized and focused on the main view controller that manages your app’s primary functionality. If your app becomes more complex or requires additional view controllers, you might consider creating separate files for each distinct functionality. For instance, you could create a dedicated file for handling API interactions or managing speech recognition. However, for this relatively simple app, organizing the code snippets within the ViewController.swift file should be sufficient.

TED BARNETT:

Could you provide a complete copy of ViewController.swift that includes all of the snippets in the order they should appear?

ChatGPT:

- Here’s a complete

ViewController.swiftfile with all the code snippets in the correct order:

import UIKit

import AVFoundation

import Speech

class ViewController: UIViewController, SFSpeechRecognizerDelegate {

@IBOutlet weak var textView: UITextView!

@IBOutlet weak var recordButton: UIButton!

private let speechRecognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US"))!

private var recognitionRequest: SFSpeechAudioBufferRecognitionRequest?

private var recognitionTask: SFSpeechRecognitionTask?

private let audioEngine = AVAudioEngine()

private let speechSynthesizer = AVSpeechSynthesizer()

private var conversationHistory: [String] = []

override func viewDidLoad() {

super.viewDidLoad()

requestMicrophoneAccess()

configureSpeechRecognition()

}

func requestMicrophoneAccess() {

AVAudioSession.sharedInstance().requestRecordPermission { granted in

DispatchQueue.main.async {

if granted {

print("Microphone access granted")

} else {

print("Microphone access denied")

}

}

}

}

func configureSpeechRecognition() {

speechRecognizer.delegate = self

SFSpeechRecognizer.requestAuthorization { authStatus in

DispatchQueue.main.async {

switch authStatus {

case .authorized:

print("Speech recognition authorized.")

self.recordButton.isEnabled = true

case .denied, .restricted, .notDetermined:

print("Speech recognition not authorized.")

self.recordButton

TED BARNETT:

I think you truncated the last reply. Could you try that again?

ChatGPT:

- Apologies for the truncation. Here’s the complete

ViewController.swiftfile with all the code snippets in the correct order:

import UIKit

import AVFoundation

import Speech

class ViewController: UIViewController, SFSpeechRecognizerDelegate {

@IBOutlet weak var textView: UITextView!

@IBOutlet weak var recordButton: UIButton!

private let speechRecognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US"))!

private var recognitionRequest: SFSpeechAudioBufferRecognitionRequest?

private var recognitionTask: SFSpeechRecognitionTask?

private let audioEngine = AVAudioEngine()

private let speechSynthesizer = AVSpeechSynthesizer()

private var conversationHistory: [String] = []

override func viewDidLoad() {

super.viewDidLoad()

requestMicrophoneAccess()

configureSpeechRecognition()

}

func requestMicrophoneAccess() {

AVAudioSession.sharedInstance().requestRecordPermission { granted in

DispatchQueue.main.async {

if granted {

print("Microphone access granted")

} else {

print("Microphone access denied")

}

}

}

}

func configureSpeechRecognition() {

speechRecognizer.delegate = self

SFSpeechRecognizer.requestAuthorization { authStatus in

DispatchQueue.main.async {

switch authStatus {

case .authorized:

print("Speech recognition authorized.")

self.recordButton.isEnabled = true

case .denied, .restricted, .notDetermined:

print("Speech recognition not authorized.")

self.recordButton.isEnabled = false

@unknown default:

print("Unknown speech recognition authorization status.")

}

}

}

}

func startRecording() {

//...

}

@IBAction func recordButtonTapped(_ sender: UIButton) {

//...

}

func sendToOpenAI(text: String, completion: @escaping (String?, Error?) -> Void) {

//...

}

func speak(text: String) {

//...

}

}

Remember to add the implementations for the startRecording(), recordButtonTapped(_:), sendToOpenAI(text:completion:), and speak(text:) functions as shown in the previous answers. This file contains all the necessary code snippets for your app, organized in the proper order.

TED BARNETT:

Don’t I also need to call configureSpeechRocognition() as well in the viewDidLoad() method?

ChatGPT:

- You’re correct. I apologize for missing that in the previous response. You should call

configureSpeechRecognition()in theviewDidLoad()method. Here’s the updatedviewDidLoad()method:

override func viewDidLoad() {

super.viewDidLoad()

requestMicrophoneAccess()

configureSpeechRecognition()

}

With this change, the configureSpeechRecognition() function will be called when the view controller’s view is loaded, ensuring that speech recognition is properly set up and configured.

TED BARNETT:

Can you provide all of the code that should be in the sendToOpenAI() function?

ChatGPT:

- Certainly! Here’s the complete

sendToOpenAI(text:completion:)function:

import Foundation

// Replace YOUR_API_KEY with your actual OpenAI API key

let openAI_APIKey = "YOUR_API_KEY"

func sendToOpenAI(text: String, completion: @escaping (String?, Error?) -> Void) {

let url = URL(string: "https://api.openai.com/v1/engines/davinci-codex/completions")!

var request = URLRequest(url: url)

request.httpMethod = "POST"

request.setValue("Bearer \(openAI_APIKey)", forHTTPHeaderField: "Authorization")

request.setValue("application/json", forHTTPHeaderField: "Content-Type")

let prompt = "You: \(text)\nAI:"

let requestData: [String: Any] = [

"prompt": prompt,

"max_tokens": 150,

"n": 1,

"stop": ["\n"],

"temperature": 0.5

]

request.httpBody = try? JSONSerialization.data(withJSONObject: requestData, options: [])

let task = URLSession.shared.dataTask(with: request) { data, response, error in

if let error = error {

completion(nil, error)

} else if let data = data {

do {

if let json = try JSONSerialization.jsonObject(with: data, options: []) as? [String: Any],

let choices = json["choices"] as? [[String: Any]],

let firstChoice = choices.first,

let text = firstChoice["text"] as? String {

completion(text.trimmingCharacters(in: .whitespacesAndNewlines), nil)

} else {

completion(nil, NSError(domain: "", code: 0, userInfo: [NSLocalizedDescriptionKey: "Invalid response"]))

}

} catch {

completion(nil, error)

}

}

}

task.resume()

}

Add this function to your ViewController.swift file. This function sends the user’s text input to the OpenAI API and calls the completion handler with the AI’s response or an error if one occurs. Remember to replace "YOUR_API_KEY" with your actual OpenAI API key.

Please note that this example uses the OpenAI API v1, which might not be the latest version at the time you implement this. Be sure to check OpenAI’s official API documentation to ensure you’re using the latest version and features.

TED BARNETT:

this version does not include the “self.speak()” that appeared in your earlier version of the sendToOpenAI code. Is that an omission or is it no longer needed to enable text-to-speech?

ChatGPT:

- You’re correct, my previous response omitted the call to

self.speak()in the completion handler of thesendToOpenAI(text:completion:)function. I apologize for the oversight. The call toself.speak()is needed to enable text-to-speech for the AI’s response.

Here’s the updated completion handler code for the sendToOpenAI(text:completion:) function:

sendToOpenAI(text: textView.text) { response, error in

DispatchQueue.main.async {

if let error = error {

self.textView.text = "Error: \(error.localizedDescription)"

} else if let response = response {

let userInput = "You: \(self.textView.text)"

let aiResponse = "AI: \(response)"

// Append the user input and AI's response to the conversation history

self.conversationHistory.append(contentsOf: [userInput, aiResponse])

// Update the text view with the full conversation history

self.textView.text = self.conversationHistory.joined(separator: "\n")

// Speak the AI's response

self.speak(text: aiResponse)

}

}

}

Add this code to the completion handler of the sendToOpenAI(text:completion:) function in your ViewController.swift file. This will ensure that the AI’s response is converted to speech using the speak(text:) function.

TED BARNETT:

I don’t understand exactly how to incorporate this code snippet. Could you provide a complete version of the code for the sendToOpenAI() function that includes this last snippet in the proper location?

ChatGPT:

- Apologies for the confusion. I realize now that my previous response was not clear. You don’t need to modify the

sendToOpenAI(text:completion:)function itself. Instead, you should include the completion handler with the call toself.speak()in therecordButtonTapped(_:)action.

Here’s the updated recordButtonTapped(_:) action with the completion handler that includes the call to self.speak():

@IBAction func recordButtonTapped(_ sender: UIButton) {

if audioEngine.isRunning {

audioEngine.stop()

recognitionRequest?.endAudio()

recordButton.isEnabled = false

recordButton.setTitle("Start Recording", for: .normal)

sendToOpenAI(text: textView.text) { response, error in

DispatchQueue.main.async {

if let error = error {

self.textView.text = "Error: \(error.localizedDescription)"

} else if let response = response {

let userInput = "You: \(self.textView.text)"

let aiResponse = "AI: \(response)"

// Append the user input and AI's response to the conversation history

self.conversationHistory.append(contentsOf: [userInput, aiResponse])

// Update the text view with the full conversation history

self.textView.text = self.conversationHistory.joined(separator: "\n")

// Speak the AI's response

self.speak(text: aiResponse)

}

}

}

} else {

startRecording()

recordButton.setTitle("Stop Recording", for: .normal)

}

}

This way, when the user taps the record button and the recording stops, the app sends the user’s input to the OpenAI API and handles the AI’s response in the completion handler. The AI’s response is then spoken using the speak(text:) function. The sendToOpenAI(text:completion:) function remains unchanged.

TED BARNETT:

Thanks. the code line that reads ‘let userInput = “You: (self.textView.text)”‘ is generating a warning in Xcode reading ‘String interpolation produces a debug description for an optional value; did you mean to make this explicit?’. Do I need to change this line to eliminate the warning?

ChatGPT:

- Apologies for the oversight. The warning is generated because

textView.textis an optional value, and it’s being used directly in the string interpolation. To resolve this warning, you can use the nil-coalescing operator (??) to provide a default value in casetextView.textisnil.

Here’s the updated line of code:

let userInput = "You: \(self.textView.text ?? "")"

By providing an empty string as a default value, you ensure that if textView.text is nil, the empty string will be used, and the warning should be resolved.

TED BARNETT:

Okay. It is compiling and building properly. However, when I run the application, I get the following warning indicated (and Xcode highlights the line “@main”): ‘[<LifeStory.ViewController 0x143f047a0> setValue:forUndefinedKey:]: this class is not key value coding-compliant for the key LabelText’

ChatGPT:

- This error typically occurs when there is an issue with your Interface Builder (Storyboard or XIB) connections to the code, specifically when an IBOutlet, IBAction, or other connection is not properly set up or is misconfigured.

To resolve this issue, follow these steps:

- Open your storyboard or XIB file in Interface Builder.

- Select the view controller that is causing the problem (in this case, it should be

ViewController). - Open the Connections Inspector in the right panel (the icon looks like a circle with an arrow pointing right).

- Check for any connections with a yellow warning triangle icon, which indicates that the connection is referencing an undefined key or property.

- Remove any connections with a yellow warning triangle by clicking on the “x” button next to the connection.

It seems like there is a connection referencing the key LabelText, but it’s not defined in your ViewController class. Make sure you remove this connection, and if needed, create the appropriate IBOutlet or IBAction connections for your UI elements.

After removing the problematic connection and ensuring that your connections are set up correctly, the error should be resolved, and your app should run without any issues.

TED BARNETT:

where do i find the Interface Builder?

ChatGPT:

- Interface Builder is integrated into Xcode and is used to visually design and create your app’s user interface. You can find it when you open your storyboard or XIB file in Xcode.

To open Interface Builder in Xcode, follow these steps:

- Open your Xcode project.

- In the Project Navigator on the left side, look for a file with the extension

.storyboardor.xib. In most cases, the main storyboard file is namedMain.storyboard. - Click on the

Main.storyboardfile (or the relevant.xibfile). Xcode will open the Interface Builder, and you’ll see the visual representation of your app’s user interface.

In Interface Builder, you can add, modify, or remove UI elements, as well as create and manage connections to your code, such as IBOutlet and IBAction connections. You can also customize various properties of the UI elements and set up Auto Layout constraints for responsive layouts.

TED BARNETT:

I am now getting this error: “LifeStory.ViewController 0x103407540> setValue:forUndefinedKey:]: this class is not key value coding-compliant for the key LabelText”

ChatGPT:

- This error is usually caused by an incorrect connection between your Interface Builder (Storyboard or XIB) and the view controller’s code. It looks like there is a connection referencing the key

LabelText, but it’s not defined in yourViewControllerclass.

To resolve this issue, follow these steps:

- Open your Xcode project.

- In the Project Navigator on the left side, click on the

Main.storyboardfile (or the relevant.xibfile). Xcode will open the Interface Builder. - Select the view controller that is causing the problem (in this case, it should be

ViewController). - Open the Connections Inspector in the right panel (the icon looks like a circle with an arrow pointing right).

- Check for any connections with a yellow warning triangle icon, which indicates that the connection is referencing an undefined key or property.

- Remove any connections with a yellow warning triangle by clicking on the “x” button next to the connection.

After removing the problematic connection and ensuring that your connections are set up correctly, the error should be resolved, and your app should run without any issues.

If you still have any issues or can’t find the problematic connection, you may want to double-check the connections between your UI elements and your ViewController class’s code in the Interface Builder. Ensure that any IBOutlet or IBAction references are correctly connected and have the proper names.

TED BARNETT:

what do you mean “have the proper name”?

ChatGPT:

- By “have the proper name,” I mean that the name of the IBOutlet or IBAction in your Interface Builder should match the name of the corresponding property or method in your

ViewControllerclass’s code.

For example, if you have a UILabel in your Interface Builder and an IBOutlet in your ViewController class like this:

@IBOutlet weak var myLabel: UILabel!

The connection in Interface Builder should have the name myLabel, and it should be connected to the UILabel. If the name in Interface Builder does not match the name in your code, you may encounter errors like the one you’re experiencing.

To ensure that the connections have the proper names, follow these steps:

- Open your Xcode project and click on the

Main.storyboardfile (or the relevant.xibfile) to open the Interface Builder. - Select a UI element (e.g., UILabel) that is connected to an IBOutlet or IBAction in your

ViewControllerclass. - Open the Connections Inspector in the right panel (the icon looks like a circle with an arrow pointing right).

- Check the connections listed under “Referencing Outlets” (for IBOutlet) or “Sent Events” (for IBAction). Make sure that the name of the connection matches the name of the corresponding property or method in your

ViewControllerclass’s code.

If you find any discrepancies, you can remove the incorrect connection by clicking on the “x” button next to the connection in the Connections Inspector. Then, create a new connection by clicking on the small circle next to the IBOutlet or IBAction name in the Connections Inspector and dragging it to the correct UI element in Interface Builder.

TED BARNETT:

I don’t see a key called “LabelText” anywhere in my code. How do I find it?

ChatGPT:

- If you can’t find the “LabelText” key in your code, it’s possible that the problematic connection is hidden in your Interface Builder (Storyboard or XIB file). To find and remove the “LabelText” key, follow these steps:

- Open your Xcode project and click on the

Main.storyboardfile (or the relevant.xibfile) to open the Interface Builder. - Click on the view controller that is causing the issue (in this case, it should be

ViewController). - In the right panel, click on the “Identity Inspector” tab (the icon looks like a circle with an “i” inside it).

- Under “User Defined Runtime Attributes,” look for a row with a key named

LabelText. If you find it, select the row and click on the “-” (minus) button at the bottom to remove the row.

If you can’t find a LabelText key in the “User Defined Runtime Attributes” section, try searching for it in the Connections Inspector:

- With the

ViewControllerstill selected, click on the “Connections Inspector” tab in the right panel (the icon looks like a circle with an arrow pointing right). - Check for any connections with a yellow warning triangle icon, which indicates that the connection is referencing an undefined key or property. Look for any connection with the “LabelText” key.

- If you find a connection with the “LabelText” key, remove it by clicking on the “x” button next to the connection.

After removing the problematic “LabelText” key, the error should be resolved, and your app should run without any issues. If you still can’t find the “LabelText” key, double-check all the connections in your Interface Builder and ensure that they are correctly set up.

TED BARNETT:

I do not see a circle with an “i” inside it as described above. Is there another way to open the Identity Inspector?

ChatGPT:

- Apologies for the confusion. The “Identity Inspector” icon has changed in the latest versions of Xcode. I’ll provide you with the updated steps to open the “Identity Inspector” in Xcode:

- Open your Xcode project and click on the

Main.storyboardfile (or the relevant.xibfile) to open the Interface Builder. - Click on the view controller that is causing the issue (in this case, it should be

ViewController). - In the right panel, click on the “Identity Inspector” tab. It is the third tab from the left, with an icon that looks like a small ID card.

Once you have the “Identity Inspector” open, follow the steps in my previous response to locate and remove the “LabelText” key from the “User Defined Runtime Attributes” section.

If you still can’t find the “LabelText” key or are unable to resolve the issue, it might be helpful to look through your storyboard’s source code (XML) to locate the problematic connection:

- In the Project Navigator, right-click (or Control-click) on the

Main.storyboardfile (or the relevant.xibfile). - Select “Open As” and then click on “Source Code” in the context menu.

- In the source code, search for the “LabelText” key by pressing

Cmd + Fand typing “LabelText” into the search bar. - If you find the “LabelText” key, carefully delete the line of code containing it. Make sure not to delete any other parts of the XML structure.

After making these changes, save the file, switch back to Interface Builder by right-clicking on the file and selecting “Open As” > “Interface Builder – Storyboard” (or “Interface Builder – XIB”), and then try running the app again.

TED BARNETT:

The app is now building and launching on my iPhone. However, after I press the button recordButton and speak text into the microphone, it is correctly converted to text, but when I then click the recordButton again to stop the recording, I see the message “Error: Invalid response” displayed in the textView. Does this mean that the OpenAI interface is not set up correctly?

ChatGPT:

- The “Error: Invalid response” message suggests that there might be an issue with the OpenAI API response or the handling of the response in the

sendToOpenAI()function. It’s possible that there’s a problem with the API call, or the response from the OpenAI API might have an unexpected format.

To further diagnose this issue, I recommend adding print statements to your sendToOpenAI() function to get more information about the API call and response:

- Add a print statement before the API call to display the input text:

print("Sending to OpenAI: \(inputText)")

- Add a print statement in the

completionHandlerclosure to display the raw response from the OpenAI API:

print("Raw response from OpenAI: \(String(data: data, encoding: .utf8) ?? "Unable to decode response")")

- Add a print statement in the

catchblock to display any errors that occur during the JSON decoding process:

print("Error decoding JSON: \(error.localizedDescription)")

Update your sendToOpenAI() function to include these print statements, then run your app again and test the functionality. Check the Xcode console (or device logs) to see the printed output, which should give you more information about the issue.

If the API call is successful, you should see the input text being sent to OpenAI and a valid JSON response from the API. If there’s an issue with the API call, you might see an error message in the response or an issue with the JSON decoding. Based on the output, you can further determine what might be causing the problem and make the necessary adjustments to your code.

Additionally, ensure that your API key is set correctly in the API_KEY constant and that you’re using the appropriate API endpoint for the GPT model you want to use.

TED BARNETT:

Which line in my code is the API call to OpenAI?

ChatGPT:

- In your code, the API call to OpenAI is made using the

URLSession.shared.dataTask(with:completionHandler:)method. Here’s the relevant portion of thesendToOpenAI()function:

URLSession.shared.dataTask(with: request) { data, response, error in

// Handle the response here

}.resume()

This method creates a data task that retrieves the contents of the specified URL (the OpenAI API endpoint) and calls the completion handler when the task finishes. The request object contains the necessary information for the API call, such as the URL, HTTP method, headers, and request body.

When the API call completes, the completionHandler closure is executed, and it receives the data, response, and error parameters. Inside this closure, you handle the API response, such as decoding the JSON data and updating the UI with the received information.

TED BARNETT:

Can you provide an updated version of the sendToOpenAI that includes these 3 print statements (with the correct variable names)?

ChatGPT:

- Here’s an updated version of the

sendToOpenAI()function with the three print statements added:

func sendToOpenAI(inputText: String) {

guard let apiKey = ProcessInfo.processInfo.environment["OPENAI_API_KEY"] else {

print("Error: Missing OpenAI API key")

return

}

let headers = [

"Content-Type": "application/json",

"Authorization": "Bearer \(apiKey)"

]

let body: [String: Any] = [

"model": "text-davinci-002",

"prompt": inputText,

"max_tokens": 150,

"n": 1,

"stop": ["\n"],

"temperature": 0.5

]

guard let url = URL(string: "https://api.openai.com/v1/engines/davinci-codex/completions") else {

print("Error: Invalid URL")

return

}

var request = URLRequest(url: url)

request.httpMethod = "POST"

request.allHTTPHeaderFields = headers

request.httpBody = try? JSONSerialization.data(withJSONObject: body, options: [])

print("Sending to OpenAI: \(inputText)")

URLSession.shared.dataTask(with: request) { data, response, error in

if let error = error {

print("Error: \(error.localizedDescription)")

return

}

guard let data = data else {

print("Error: Data is nil")

return

}

print("Raw response from OpenAI: \(String(data: data, encoding: .utf8) ?? "Unable to decode response")")

do {

let decoder = JSONDecoder()

let result = try decoder.decode(OpenAIResponse.self, from: data)

DispatchQueue.main.async {

self.textView.text = self.textView.text + "\nAI: \(result.choices[0].text.trimmingCharacters(in: .whitespacesAndNewlines))"

}

} catch {

print("Error decoding JSON: \(error.localizedDescription)")

DispatchQueue.main.async {

self.textView.text = "Error: Invalid response"

}

}

}.resume()

}

This updated version includes the three print statements for displaying the input text being sent to OpenAI, the raw response from the OpenAI API, and any errors that occur during JSON decoding. After you add this version to your code and run the app, you can check the Xcode console (or device logs) for the printed output. The output should help you diagnose any issues related to the OpenAI API call and response handling.

TED BARNETT:

Is this code in your last response written in Swift, which is what I am using?

ChatGPT:

- Yes, the code provided in my last response is written in Swift, which is the language you are using for your iPhone application. It is an updated version of the

sendToOpenAI()function that includes the three print statements to help you diagnose any issues related to the OpenAI API call and response handling. You can add this function to yourViewControllerclass and run the app to see the printed output in the Xcode console (or device logs).

TED BARNETT:

I replaced sendToOpenAI() with your suggested code above, but now see this error: “Cannot find ‘OpenAIResponse’ in scope” on the line that reads “let result = try decoder.decode(OpenAIResponse.self, from: data)”

ChatGPT:

- Apologies for the oversight. I missed providing you with the

OpenAIResponsestruct, which is required for decoding the JSON response from the OpenAI API. Please add the following struct to yourViewController.swiftfile, preferably outside theViewControllerclass:

struct OpenAIResponse: Codable {

let id: String

let object: String

let created: Int

let model: String

let usage: Usage

let choices: [Choice]

struct Usage: Codable {

let prompt_tokens: Int

let completion_tokens: Int

let total_tokens: Int

}

struct Choice: Codable {

let text: String

let index: Int

let logprobs: JSONNull?

let finish_reason: String

}

}

struct JSONNull: Codable {

init(from decoder: Decoder) throws {

if let _ = try? decoder.singleValueContainer().decodeNil() {

return

}

throw DecodingError.typeMismatch(JSONNull.self, DecodingError.Context(codingPath: decoder.codingPath, debugDescription: "Not a JSON null value"))

}

func encode(to encoder: Encoder) throws {

var container = encoder.singleValueContainer()

try container.encodeNil()

}

}

This struct defines the expected structure of the JSON response from the OpenAI API, which is necessary for decoding the data using the JSONDecoder in the sendToOpenAI() function.

After you add the OpenAIResponse and JSONNull structs to your code, the “Cannot find ‘OpenAIResponse’ in scope” error should be resolved, and you should be able to build and run your app without any issues.

TED BARNETT:

Can I add these struct definitions just before the line that begins “class ViewController…”?

ChatGPT:

- Yes, you can add the

OpenAIResponseandJSONNullstruct definitions just before the line that begins “class ViewController…”. This will make the structs available within the scope of the file, and you can use them in yourViewControllerclass.

Here’s how your code should look:

import UIKit

import Speech

struct OpenAIResponse: Codable {

// ... (rest of the OpenAIResponse struct)

}

struct JSONNull: Codable {

// ... (rest of the JSONNull struct)

}

class ViewController: UIViewController {

// ... (rest of your ViewController class)

}

By adding the structs in this way, you should be able to build and run your app without encountering the “Cannot find ‘OpenAIResponse’ in scope” error.

TED BARNETT:

Now I see a new error. The sendToOpenAI(text: textView.text) call in the function “recordButtonTapped” generates the error “Extra arguments at positions #1, #2 in call”. Do I need to rewrite the fuction “recordButtonTapped”?

ChatGPT:

- Apologies for the confusion. It seems I made an error in a previous response. You should indeed call

sendToOpenAI(inputText: textView.text)instead ofsendToOpenAI(text: textView.text)in therecordButtonTappedfunction.

Here’s the corrected version of the recordButtonTapped function:

@objc func recordButtonTapped() {

if isRecording {

recordButton.setTitle("Start Recording", for: .normal)

isRecording = false

audioEngine.stop()

recognitionRequest?.endAudio()

sendToOpenAI(inputText: textView.text)

} else {

recordButton.setTitle("Stop Recording", for: .normal)

isRecording = true

startRecording()

}

}

With this change, you should no longer encounter the “Extra arguments at positions #1, #2 in call” error, and your app should build and run correctly.

TED BARNETT:

But this was an @IBAction before. Is it now an @objc function?

ChatGPT:

- Apologies for the confusion. If you connected your

recordButtonas anIBAction, you should keep it that way.

Here’s the corrected version of the recordButtonTapped function as an IBAction:

@IBAction func recordButtonTapped(_ sender: UIButton) {

if isRecording {

recordButton.setTitle("Start Recording", for: .normal)

isRecording = false

audioEngine.stop()

recognitionRequest?.endAudio()

sendToOpenAI(inputText: textView.text)

} else {

recordButton.setTitle("Stop Recording", for: .normal)

isRecording = true

startRecording()

}

}

This change should resolve any issues with the function being an IBAction or an @objc function. Your app should now build and run correctly.

TED BARNETT:

But this eliminates the code that appended the user input and AI response to the conversation history. Don’t I need that too?

ChatGPT:

- You’re right, I missed that part. I apologize for the confusion. Here’s the corrected version of the

recordButtonTappedfunction as anIBAction, including the code to append the user input and AI response to the conversation history:

@IBAction func recordButtonTapped(_ sender: UIButton) {

if isRecording {

recordButton.setTitle("Start Recording", for: .normal)

isRecording = false

audioEngine.stop()

recognitionRequest?.endAudio()

if let userText = textView.text {

let userInput = "You: \(userText)"

textView.text = textView.text + "\n" + userInput

}

sendToOpenAI(inputText: textView.text)

} else {

recordButton.setTitle("Stop Recording", for: .normal)

isRecording = true

startRecording()

}

}

With this change, your app should now append the user input and AI response to the conversation history, and build and run correctly.

TED BARNETT:

Ah. The response from OpenAI has the message “Cannot specify both model and engine”. My requestData reads as follows: let requestData: [String: Any] = [

"model": "text-davinci-003",

"prompt": prompt,

"max_tokens": 150,

"n": 1,

"stop": ["\n"],

"temperature": 0.5

]

ChatGPT:

- I apologize for the confusion earlier. It seems I made an error in the API call URL. Since you are using the “text-davinci-003” model, the URL should be constructed using the “davinci-codex” engine. Please update the URL in the

sendToOpenAIfunction to the following:

guard let url = URL(string: "https://api.openai.com/v1/engines/text-davinci-003/completions") else {

print("Error: Invalid URL")

return

}

This change should resolve the “Cannot specify both model and engine” error in the OpenAI API response. Your app should now work correctly with the “text-davinci-003” model.

TED BARNETT:

How can I make sure that the text displayed is bold for the “You: ” text that appears?

ChatGPT:

- To make the “You: ” text bold in the

UITextView, you’ll need to use anNSMutableAttributedStringto apply the bold font style. Here’s an updated version of therecordButtonTappedfunction that appends the bold “You: ” text to the textView:

@IBAction func recordButtonTapped(_ sender: UIButton) {

if isRecording {

recordButton.setTitle("Start Recording", for: .normal)

isRecording = false

audioEngine.stop()

recognitionRequest?.endAudio()

if let userText = textView.text {

let boldFont = UIFont.boldSystemFont(ofSize: textView.font?.pointSize ?? UIFont.systemFontSize)

let regularFont = UIFont.systemFont(ofSize: textView.font?.pointSize ?? UIFont.systemFontSize)

let attributedUserInput = NSMutableAttributedString(string: "You: ", attributes: [NSAttributedString.Key.font: boldFont])

attributedUserInput.append(NSAttributedString(string: userText, attributes: [NSAttributedString.Key.font: regularFont]))

attributedUserInput.append(NSAttributedString(string: "\n"))

let conversationHistory = NSMutableAttributedString(attributedString: textView.attributedText)

conversationHistory.append(attributedUserInput)

textView.attributedText = conversationHistory

}

sendToOpenAI(inputText: textView.text)

} else {

recordButton.setTitle("Stop Recording", for: .normal)

isRecording = true

startRecording()

}

}

In this updated version, we create an NSMutableAttributedString with the bold font for the “You: ” text and the regular font for the user’s input text. We then append this attributed string to the existing conversation history in the textView.

With this change, the “You: ” text should appear bold in the textView.