ChatGPT 4 Xcode with Self-Hosted LLM

This project builds an Xcode Source Editor Extension that lets developers use ChatGPT (OpenAI) or a local LLM on their code.

Features

- Refactor code

- Check code smells (whole file or selected lines)

- Convert a JSON file to Swift code

- Add comments to the selected function

- Create a regex from the selected string

- Create unit tests for the selected function

- Explain code in the file

Architecture

Running OpenAI

Rename .env.example to .env and add your OpenAI API key. The .env file is already listed in .gitignore, along with the .xcconfig files.

The original .xcconfig files are committed to the repo. To keep your modified versions from being committed, tell Git to ignore local changes to them:

git update-index --assume-unchanged Configurations/*.xcconfig

To undo this:

git update-index --no-assume-unchanged Configurations/*.xcconfig

NOTE: When you run the extension app with the ChatGPTExtension scheme, a shell script defined as a pre-action in that scheme copies the API key into Common.xcconfig.
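This README does not say how the app reads the key once it is in Common.xcconfig. A common pattern, assumed in this sketch, is to expose the build setting through an Info.plist entry (for example a hypothetical `OPENAI_API_KEY` key whose value is `$(OPENAI_API_KEY)`) and read it from the bundle at runtime. The key name and the helper below are illustrative, not the project's actual code.

```swift
import Foundation

// Hypothetical Info.plist key. Assumes Info.plist maps it to the build
// setting that the pre-action script writes into Common.xcconfig.
let apiKeyInfoKey = "OPENAI_API_KEY"

/// Returns the API key from an Info dictionary, or nil when it is missing.
/// Defaults to the running bundle's Info.plist.
func readAPIKey(from info: [String: Any]? = Bundle.main.infoDictionary) -> String? {
    guard let raw = info?[apiKeyInfoKey] as? String else { return nil }
    let key = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    // An empty value means the build setting was never populated; a literal
    // "$(...)" is a defensive check for an unexpanded reference.
    if key.isEmpty || key.hasPrefix("$(") { return nil }
    return key
}
```

Passing the dictionary as a parameter keeps the lookup testable without a real bundle.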

Running self-hosted language models

You can also use a local server/LLM with the following popular services. They all provide OpenAI-compatible APIs, so for each service you only need to update two fields in Common.xcconfig: a flag and a URL.
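Because these services expose OpenAI-compatible APIs, one request builder can target any of them by swapping the base URL. The sketch below assumes the standard `/v1/chat/completions` endpoint and a base URL that is the server root (for example `http://127.0.0.1:1234`); the type names, the model name, and the `temperature` value are illustrative assumptions, not the extension's actual code.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

struct ChatMessage: Codable, Equatable {
    let role: String
    let content: String
}

struct ChatRequest: Codable {
    let model: String
    let messages: [ChatMessage]
    let temperature: Double
}

/// Builds a POST request for an OpenAI-compatible chat completions endpoint.
/// `prompt` is the feature's instruction; `code` is the selected source text.
func makeChatRequest(baseURL: URL, apiKey: String?, model: String,
                     prompt: String, code: String) throws -> URLRequest {
    let url = baseURL.appendingPathComponent("v1/chat/completions")
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    // OpenAI requires a key; local servers may not, so it is optional here.
    if let apiKey = apiKey {
        request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    }
    let body = ChatRequest(
        model: model,
        messages: [
            ChatMessage(role: "system", content: prompt),
            ChatMessage(role: "user", content: code)
        ],
        temperature: 0.2 // illustrative value
    )
    request.httpBody = try JSONEncoder().encode(body)
    return request
}
```

Only the base URL and the optional key change between OpenAI and a local server; the request body stays the same.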

Text Generation WebUI

- Enable the USE_TEXT_WEB_UI flag

- Set the URL to your local server's address. Default: http://127.0.0.1:5000

- Run the local server in API mode:

$ ./start_macos.sh --api
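Since Text Generation WebUI's API mode is OpenAI-compatible, its replies can be decoded with the same model used for OpenAI. This minimal sketch assumes the standard response shape, where the text sits at `choices[0].message.content`; the type and function names are hypothetical, and fields the sketch does not need are ignored by `JSONDecoder`.

```swift
import Foundation

// Minimal subset of an OpenAI-style chat completion response.
struct ChatResponse: Decodable {
    struct Choice: Decodable {
        struct Message: Decodable {
            let role: String
            let content: String
        }
        let message: Message
    }
    let choices: [Choice]
}

enum ChatResponseError: Error {
    case noChoices
}

/// Extracts the assistant's text from the first choice of a response body.
func assistantText(from data: Data) throws -> String {
    let response = try JSONDecoder().decode(ChatResponse.self, from: data)
    guard let first = response.choices.first else {
        throw ChatResponseError.noChoices
    }
    return first.message.content
}
```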

LM Studio

- Enable the USE_LM_STUDIO flag

- Set the URL to your local server's address. Default: http://127.0.0.1:1234

- In LM Studio, select your model at the top, then click “Start Server”.

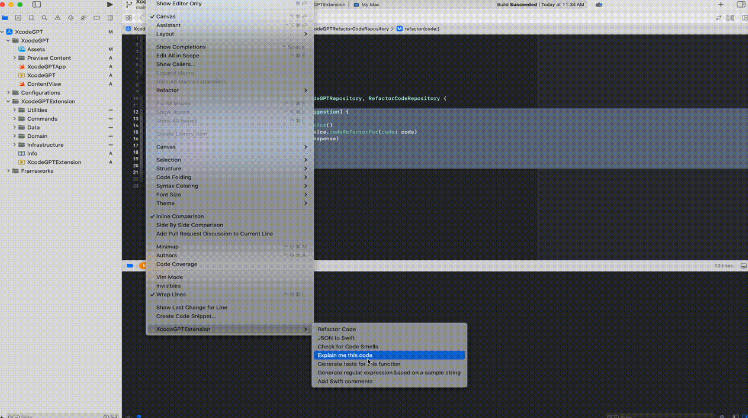
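The USE_TEXT_WEB_UI and USE_LM_STUDIO flags effectively switch between backends. The sketch below is hypothetical: it assumes the flag values reach Swift as strings (for example through Info.plist entries) and that only one flag is enabled at a time. The default URLs for the local servers are the ones listed above; the OpenAI URL is its public API root.

```swift
import Foundation

/// Backends described in this README, with their default base URLs.
enum Backend: Equatable {
    case openAI
    case textGenerationWebUI
    case lmStudio

    var defaultBaseURL: URL {
        switch self {
        case .openAI: return URL(string: "https://api.openai.com")!
        case .textGenerationWebUI: return URL(string: "http://127.0.0.1:5000")!
        case .lmStudio: return URL(string: "http://127.0.0.1:1234")!
        }
    }
}

/// Picks a backend from the two flags. If both are set, Text Generation
/// WebUI wins; that precedence is an arbitrary choice for this sketch.
func selectBackend(useTextWebUI: Bool, useLMStudio: Bool) -> Backend {
    if useTextWebUI { return .textGenerationWebUI }
    if useLMStudio { return .lmStudio }
    return .openAI
}

/// Interprets an xcconfig-style flag value such as "YES", "1" or "true".
func flagIsOn(_ value: String?) -> Bool {
    guard let value = value?.lowercased() else { return false }
    return ["yes", "1", "true"].contains(value)
}
```

With neither flag enabled, the sketch falls back to OpenAI, matching the "Running OpenAI" setup.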

Usage

Select the “ChatGPTExtension” scheme, then choose “Product” -> “Archive” from the menu bar. In the Organizer window, select Distribute App, then Custom, then Copy App, and save the app to a local folder.

Open the folder; you should see the macOS app ChatGPT. Double-click it to install. The extension then appears on your Mac under “System Preferences” -> “Extensions”. You should now be able to use it in any Xcode project under “Editor” -> “ChatGPTExtension”.

Delete the extension: delete the app from the folder where you installed it.

Debug & Test

Edit the ChatGPTExtension scheme and select Xcode.app as the executable. When you run it, Xcode asks you to choose a project, which opens in a second Xcode instance. You can use the extension commands directly there, while the debug output appears in the console of the original Xcode instance.

Add a new feature

- Define your prompt in Localizable

- Add the command name to Info.plist

- Create the files for your Xcode command (use case, repository, and command)

- (Optional) Define a keyboard shortcut for the command to boost productivity
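The use case / repository / command split above can be sketched as follows. All names here (`CompletionRepository`, `AddCommentUseCase`) are hypothetical, and the repository is synchronous only to keep the sketch short; a real one would make the network call asynchronously. `promptTemplate` stands in for the string you define in Localizable.

```swift
import Foundation

/// Repository: sends a prompt to a model backend and returns its reply.
protocol CompletionRepository {
    func complete(systemPrompt: String, userText: String) throws -> String
}

/// Use case: one feature, e.g. "Add comments to the selected function".
struct AddCommentUseCase {
    let repository: CompletionRepository
    let promptTemplate: String // would come from Localizable in the extension

    /// Sends the selected lines to the model and returns the reply as lines.
    func execute(selectedLines: [String]) throws -> [String] {
        let selection = selectedLines.joined(separator: "\n")
        let reply = try repository.complete(systemPrompt: promptTemplate,
                                            userText: selection)
        // Split the reply back into lines so a command can write them
        // into the editor buffer.
        return reply.components(separatedBy: "\n")
    }
}
```

In the extension, the command layer would be an `XCSourceEditorCommand` whose `perform(with:completionHandler:)` reads the selection from `invocation.buffer`, calls the use case, and writes the result back. Keeping XcodeKit out of the use case lets you test it with a fake repository.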

TODO

- Support LiteLLM and other popular self-hosted solutions

- Support more OpenAI features, similar to OpenAISwift

Popular Code Models

I tested with 7B-parameter models, which are much slower than OpenAI. Depending on your machine's specs, choose the model that works best for you. I suggest downloading models with LM Studio, which shows whether a model is compatible with your machine.

Popular Self-hosted Library/Client

- llama.cpp: the source project for GGUF. Offers a CLI and a server option.

- KoboldCpp: a fully featured web UI, with GPU acceleration across all platforms and GPU architectures. Especially good for storytelling.

- LoLLMS Web UI: a great web UI with many interesting and unique features, including a full model library for easy model selection.

- Faraday.dev: an attractive, easy-to-use, character-based chat GUI for Windows and macOS (both Apple Silicon and Intel), with GPU acceleration.

- ctransformers: a Python library with GPU acceleration, LangChain support, and an OpenAI-compatible AI server.

- llama-cpp-python: a Python library with GPU acceleration, LangChain support, and an OpenAI-compatible API server.