NSFW

Our adult content detection technology scans images and identifies content that is not suitable or safe for work (NSFW). The AI-powered detector recognizes such content in real time, helping keep applications free of inappropriate material.

Features

- Scans images for nudity (Hentai, Sexy, Pornographic)

- Scans images for Drawing and Neutral content

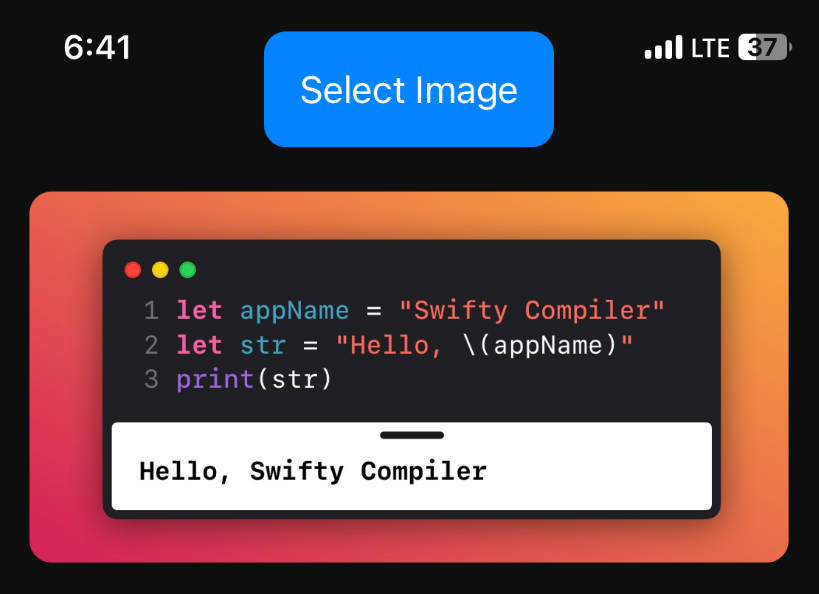

Usage

    let detector = Detector()

    // Scan the image; the completion handler receives a success or error result.
    detector.scan(image: image) { result in
        switch result {
        case .success(let successResult):
            // One value per category reported by the detector.
            print(successResult.neutral)
            print(successResult.drawing)
            print(successResult.hentai)
            print(successResult.sexy)
            print(successResult.pornagraphic)
        case .error(let error):
            print("Processing failed: \(error.localizedDescription)")
        }
    }
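The per-category values printed above can be turned into a single allow/block decision. The following is a minimal sketch, not part of the library: it assumes the values are numeric confidences between 0 and 1, and the `Scores` struct, the `isUnsafe` helper, and the 0.5 default threshold are all hypothetical names and values chosen for illustration.

```swift
// Hypothetical stand-in for the detector's success result. The real result
// type and the numeric type of its properties are assumptions.
struct Scores {
    var neutral: Double
    var drawing: Double
    var hentai: Double
    var sexy: Double
    var pornographic: Double
}

// Returns true when any nudity-related score reaches the threshold.
// The default threshold is an arbitrary illustrative value.
func isUnsafe(_ scores: Scores, threshold: Double = 0.5) -> Bool {
    return max(scores.hentai, scores.sexy, scores.pornographic) >= threshold
}

// Made-up example values, not real detector output.
let safe = Scores(neutral: 0.9, drawing: 0.05, hentai: 0.01, sexy: 0.03, pornographic: 0.01)
let risky = Scores(neutral: 0.1, drawing: 0.05, hentai: 0.05, sexy: 0.2, pornographic: 0.6)

print(isUnsafe(safe))   // prints "false"
print(isUnsafe(risky))  // prints "true"
```

In an app, a check like this would sit inside the `.success` case, with the threshold tuned to how strict the filtering needs to be.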

Requirements

- iOS 13.0+ (if you use only UIKit)

- Swift 4.2+

Installation

CocoaPods

pod 'NSFW'

Carthage

github "smartclick/NSFW"