AAObnoxiousFilter

AAObnoxiousFilter is a profanity filter for images, written in Swift and built on CoreML. It's a lightweight framework that can detect inappropriate content in a UIImage with a single line of code.

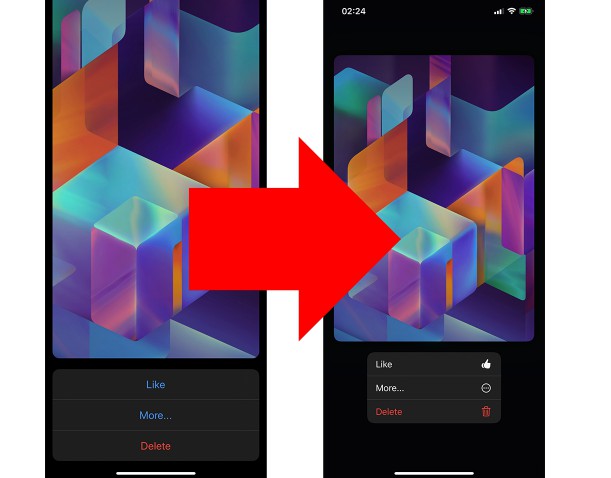

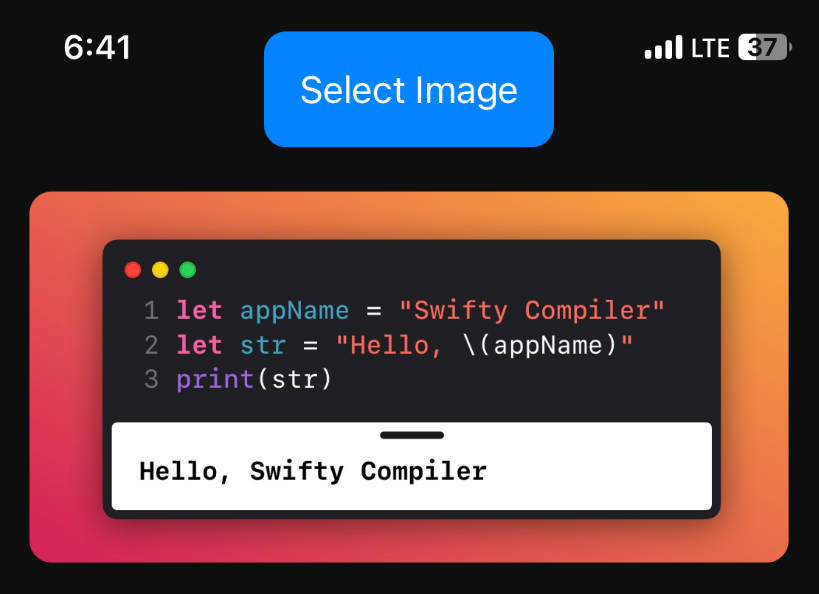

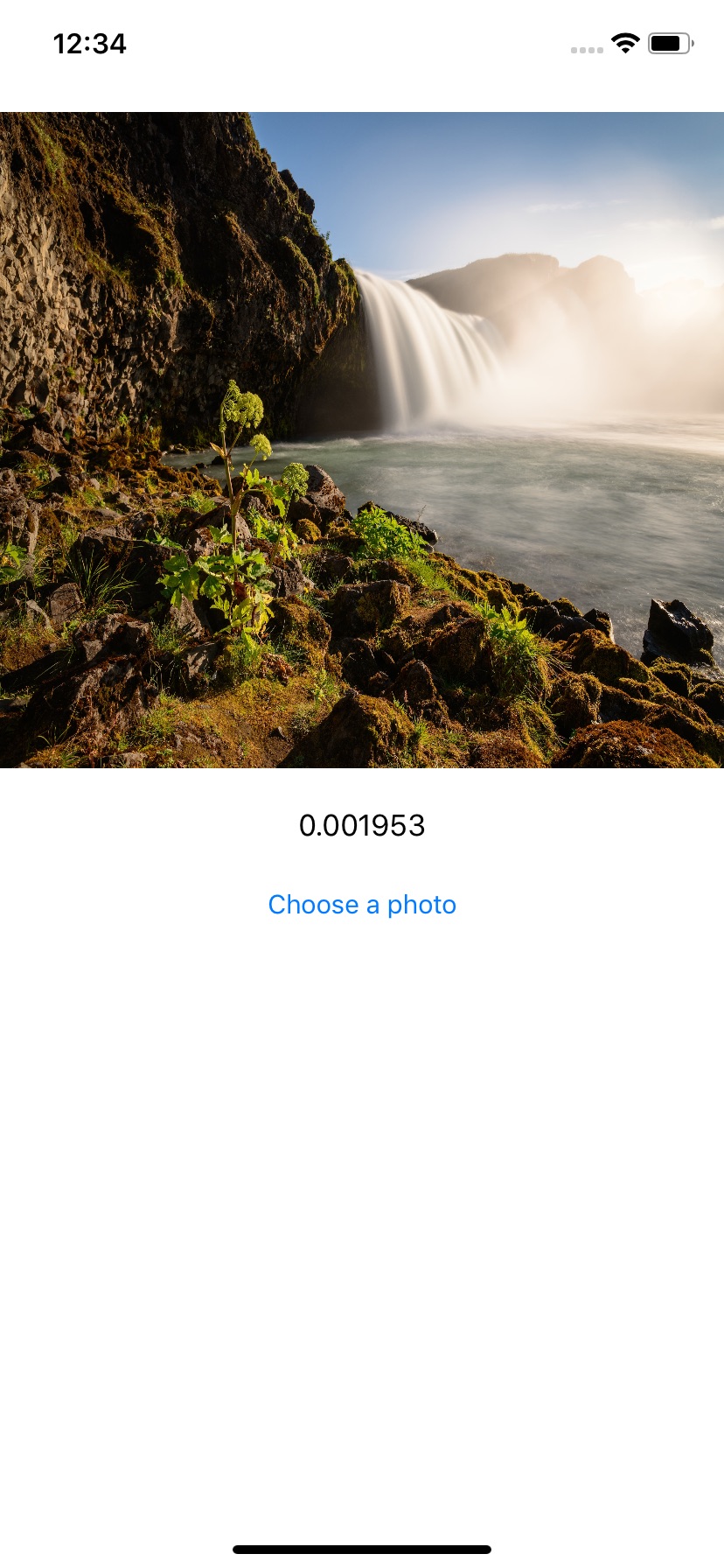

Demonstration

To run the example project, clone the repo, and run pod install from the Example directory first.

Requirements

- iOS 11.0+

- Xcode 10.0+

- Swift 4.2+

Installation

AAObnoxiousFilter can be installed using CocoaPods, Carthage, or manually.

CocoaPods

AAObnoxiousFilter is available through CocoaPods. To install CocoaPods, run:

$ gem install cocoapods

Then create a Podfile with the following contents:

source 'https://github.com/CocoaPods/Specs.git'

platform :ios, '11.0'

use_frameworks!

target '<Your Target Name>' do

pod 'AAObnoxiousFilter'

end

Finally, run the following command to install it:

$ pod install

Carthage

To install Carthage using Homebrew, run:

$ brew update

$ brew install carthage

Then add the following line to your Cartfile:

github "EngrAhsanAli/AAObnoxiousFilter" "master"

Then import the library in all files where you use it:

import AAObnoxiousFilter

Manual Installation

If you prefer not to use either of the dependency managers above, you can integrate AAObnoxiousFilter manually by adding the files in the Classes folder to your project.

Getting Started

Detect the Image

if let prediction = image.predictImage() {

// A prediction greater than 0.5 means the image is likely inappropriate

} else {

// predictImage() returned nil: the image could not be evaluated

}
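
The threshold check described above can be wrapped in a small helper so that calling code deals with a named result instead of a raw number. The following is only a sketch: `ImageVerdict` and `classify(score:threshold:)` are hypothetical names that are not part of AAObnoxiousFilter, and it assumes the prediction is an optional, probability-like score between 0 and 1, as the snippet above suggests.

```swift
// Hypothetical helper types; not part of AAObnoxiousFilter.
enum ImageVerdict: Equatable {
    case inappropriate   // score is above the threshold
    case acceptable      // score is at or below the threshold
    case unknown         // no score was produced for the image
}

/// Maps an optional score to a verdict.
/// Assumption: the score is a probability-like value in 0...1.
func classify(score: Double?, threshold: Double = 0.5) -> ImageVerdict {
    // A missing score means the image could not be evaluated.
    guard let score = score else { return .unknown }
    return score > threshold ? .inappropriate : .acceptable
}

// Possible use inside an app (assumes `image` is a UIImage and that
// predictImage() returns an optional numeric score):
// let verdict = classify(score: image.predictImage().map { Double($0) })
```

Keeping the threshold as a parameter makes it easy to be stricter or more lenient than 0.5 without touching the call sites.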