ConvoKit

Goal:

Make SwiftUI apps more accessible and more powerful through conversation.

Why:

My dad has Parkinson’s, which can make it difficult to use touch screens, especially when his tremors are worse.

I’ve tried building computer-vision-based control apps with eye tracking, but we found them somewhat inaccurate. This is where ConvoKit can hopefully help.

Beyond that, ConvoKit makes apps easier to use for everyone, and especially for people with intellectual disabilities.

Idea:

Use Whisper to transcribe spoken natural-language requests, and tiny LLMs to understand the context behind each request and decide which Swift function to call.

The development experience is as simple as adding a macro to the classes you’d like to expose to the LLM and initializing the framework within your SwiftUI View.
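To make the idea concrete, here is a minimal sketch of how a transcribed request and a list of exposed functions could be turned into a prompt for a small LLM. Everything in it is an assumption for illustration: `ExposedFunction`, `buildPrompt`, and the prompt wording are hypothetical and are not ConvoKit's actual internals.

```swift
import Foundation

// Hypothetical description of one exposed function.
// ConvoKit's real representation is not shown in this README.
struct ExposedFunction {
    let name: String
    let parameters: [(label: String, typeName: String)]

    // Renders a signature such as "printNumber(number: Int)".
    var signature: String {
        let params = parameters
            .map { "\($0.label): \($0.typeName)" }
            .joined(separator: ", ")
        return "\(name)(\(params))"
    }
}

// Builds the text a small LLM could be asked to answer (assumed prompt format).
func buildPrompt(transcript: String, functions: [ExposedFunction]) -> String {
    let options = functions.map { "- \($0.signature)" }.joined(separator: "\n")
    return """
    You can call exactly one of these functions:
    \(options)

    User request: "\(transcript)"
    Reply with the function name and its arguments.
    """
}

let functions = [
    ExposedFunction(name: "navigateToHome", parameters: []),
    ExposedFunction(name: "printNumber", parameters: [(label: "number", typeName: "Int")]),
]

// In the real flow the transcript would come from Whisper; here it is hard-coded.
let prompt = buildPrompt(transcript: "print the number seven", functions: functions)
print(prompt)
```

Keeping the option list as plain text signatures is one simple choice; it keeps the prompt short, which matters for tiny models.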

Setup:

    @Observable
    // Exposes all public functions to ConvoKit
    @ConvoAccessible
    class ContentViewModel {
        var navigationPath = NavigationPath()

        public func navigateToHome() {
            navigationPath.append(ViewType.home)
        }

        // Public functions are exposed to ConvoKit
        public func printNumber(number: Int) {
            print(number)
        }
    }

    // Initializes a view model that can interpret natural language through voice
    // and speak back if you have a backend endpoint
    @StateObject var streamer = ConvoStreamer(baseThinkURL: "", baseSpeakURL: "", localWhisperURL: Bundle.main.url(forResource: "ggml-tiny.en", withExtension: "bin")!)

    func request(text: String) async {
        // A string that holds all options (the functions you marked as public)
        let options = #GetConvoState

        // LLM decides which function to call here
        if let function = await streamer.llmManager.chooseFunction(text: text, options: options) {
            callFunctionIfNeeded(functionName: function.functionName, args: function.args)
        }
    }

    func callFunctionIfNeeded(functionName: String, args: [String]) {
        // A macro that injects a bunch of if statements to handle the called function
        #ConvoConnector
    }
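To show what "a bunch of if statements" could look like, here is a hand-written sketch of the kind of dispatch that a macro like `#ConvoConnector` might generate, plus one way the LLM's choice could be decoded. The JSON reply format, `ChosenFunction`, `DemoViewModel`, and `handle(reply:on:)` are assumptions made up for this example; only the `functionName` and `args` fields come from the code above. The view model is simplified (no SwiftUI) so the sketch runs on its own.

```swift
import Foundation

// Mirrors the two fields read from the chosen function above
// (functionName and args). The JSON shape itself is an assumption.
struct ChosenFunction: Decodable {
    let functionName: String
    let args: [String]
}

// Simplified stand-in for the view model: records calls in a log
// instead of touching a NavigationPath.
final class DemoViewModel {
    private(set) var log: [String] = []

    func navigateToHome() { log.append("navigateToHome") }
    func printNumber(number: Int) { log.append("printNumber(\(number))") }
}

// Hand-written version of the if-chain a macro could inject.
// Returns false when the name is unknown or an argument cannot be converted.
@discardableResult
func callFunctionIfNeeded(on model: DemoViewModel, functionName: String, args: [String]) -> Bool {
    if functionName == "navigateToHome", args.isEmpty {
        model.navigateToHome()
        return true
    }
    if functionName == "printNumber", args.count == 1, let number = Int(args[0]) {
        model.printNumber(number: number)
        return true
    }
    return false
}

// Decodes a (hypothetical) JSON reply from the LLM and dispatches it.
func handle(reply: String, on model: DemoViewModel) -> Bool {
    guard let choice = try? JSONDecoder().decode(ChosenFunction.self, from: Data(reply.utf8)) else {
        return false
    }
    return callFunctionIfNeeded(on: model, functionName: choice.functionName, args: choice.args)
}

let model = DemoViewModel()
let reply = #"{"functionName": "printNumber", "args": ["7"]}"#
let handled = handle(reply: reply, on: model)
print(handled, model.log)
```

Because the arguments arrive as strings, each branch converts them to the parameter type and silently declines the call if the conversion fails, which seems like a safer default than crashing on a misheard number.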

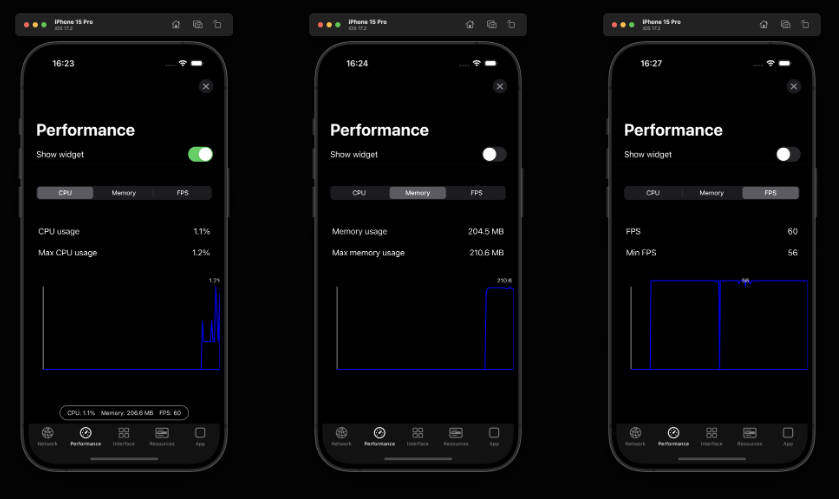

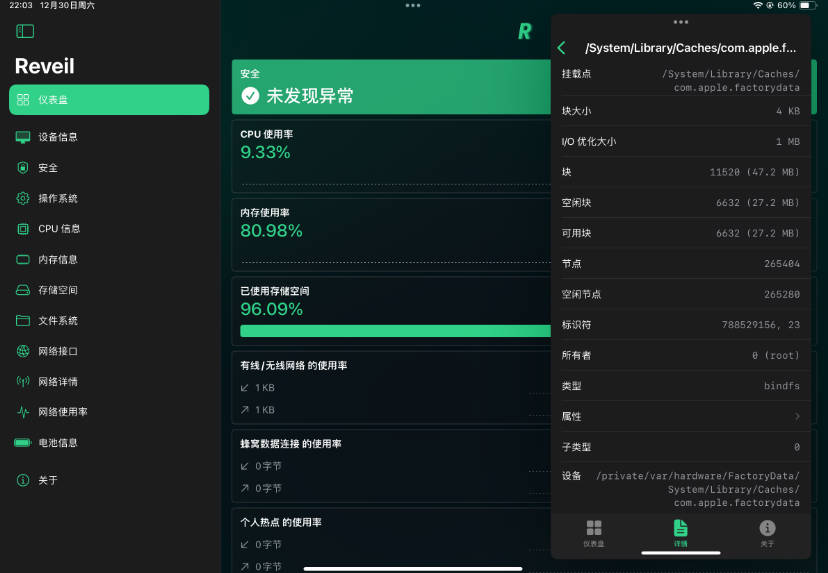

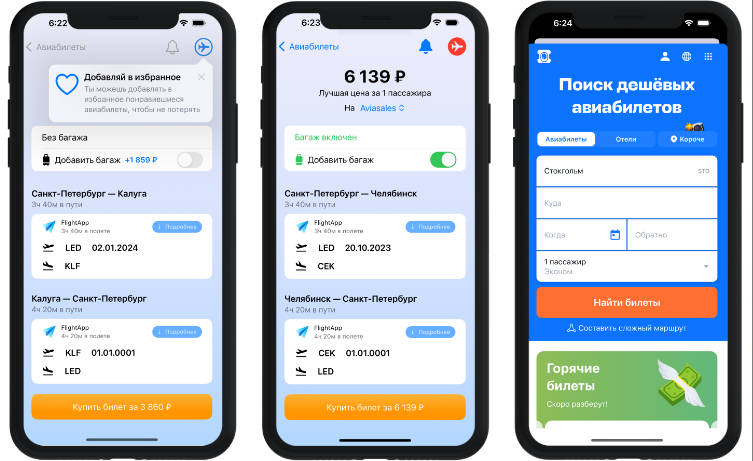

Basic Input + Output:

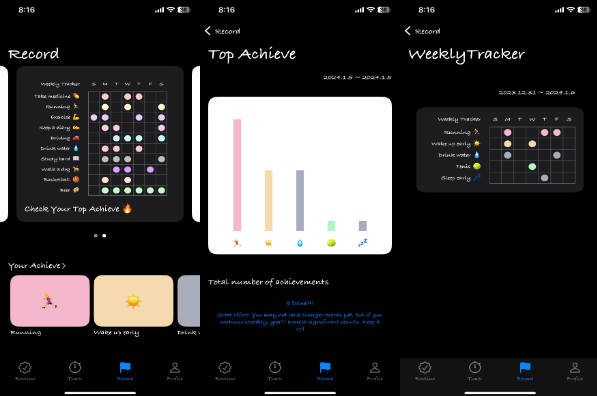

Future Use Cases (ConvoKit isn’t at this complexity yet, but this is the vision for it):

YouTube

- Open YouTube, say “Find a video that is a SQL databases college level course”

- YouTube finds a video based on your spoken words and plays it

Apple Health

- Open Apple Health, say “How was my walking this week compared to last week?”

- Apple Health responds with, “Your overall mobility has declined but your step count is up!”

- It then shows graphs explaining this data

Live Simple Example:

RPReplay_Final1704503781.mov