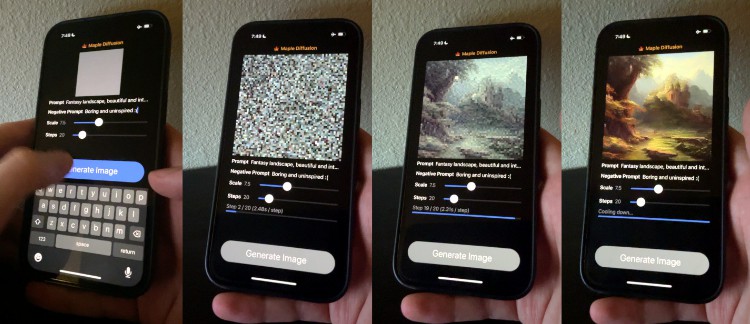

Maple Diffusion

Maple Diffusion runs Stable Diffusion models locally on macOS / iOS devices, in Swift, using the MPSGraph framework (not Python).

Maple Diffusion should be capable of generating a reasonable image in a minute or two on a recent iPhone (I get ~2.3 s/step on an iPhone 13 Pro).
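
To see how the per-step figure turns into "a minute or two", multiply it by the number of denoising steps. The step counts used here (20, 30, 50) are illustrative assumptions; this README doesn't say how many steps Maple Diffusion runs by default. A minimal sketch:

```swift
// Rough wall-clock estimate for one image: per-step time × number of denoising steps.
// The step counts in the loop are hypothetical, not values taken from Maple Diffusion.
func estimatedSeconds(steps: Int, secondsPerStep: Double) -> Double {
    return Double(steps) * secondsPerStep
}

let iPhone13ProSecondsPerStep = 2.3  // per-step figure quoted above

for steps in [20, 30, 50] {  // hypothetical step counts
    let seconds = estimatedSeconds(steps: steps, secondsPerStep: iPhone13ProSecondsPerStep)
    print("\(steps) steps ≈ \(Int(seconds.rounded())) s")
}
```

At 2.3 s/step this works out to roughly 46 s, 69 s, and 115 s, so somewhere around 30 to 50 steps lands in the quoted range.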

To attain usable performance without tripping over iOS’s 4GB memory limit, Maple Diffusion relies internally on FP16 (NHWC) tensors, operator fusion from MPSGraph, and a truly pitiable degree of swapping models to device storage.
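
A quick back-of-the-envelope calculation suggests why FP16 matters under a 4GB ceiling. The sketch assumes the commonly cited figure of roughly 860M parameters for the Stable Diffusion v1 UNet (the text encoder and VAE decoder come on top of that), and also shows how an element is addressed in a contiguous NHWC tensor. The function names are hypothetical, not Maple Diffusion's API.

```swift
// Back-of-the-envelope weight memory for FP32 vs FP16, plus NHWC element indexing.
// ASSUMPTION: ~860M parameters is an approximate, commonly cited SD v1 UNet size.

/// Bytes needed to hold `parameterCount` weights at `bytesPerElement` bytes each.
func weightBytes(parameterCount: Int, bytesPerElement: Int) -> Int {
    return parameterCount * bytesPerElement
}

/// Flat offset of element (n, h, w, c) in a contiguous NHWC tensor:
/// channels vary fastest, then width, then height, then batch.
func nhwcOffset(n: Int, h: Int, w: Int, c: Int,
                height: Int, width: Int, channels: Int) -> Int {
    return ((n * height + h) * width + w) * channels + c
}

/// Rounds to two decimal places for display.
func twoDecimals(_ x: Double) -> Double {
    return (x * 100).rounded() / 100
}

let unetParameters = 860_000_000  // approximate SD v1 UNet parameter count
let bytesPerGiB = Double(1 << 30)
let fp32GiB = Double(weightBytes(parameterCount: unetParameters, bytesPerElement: 4)) / bytesPerGiB
let fp16GiB = Double(weightBytes(parameterCount: unetParameters, bytesPerElement: 2)) / bytesPerGiB
print("UNet weights: FP32 ≈ \(twoDecimals(fp32GiB)) GiB, FP16 ≈ \(twoDecimals(fp16GiB)) GiB")
```

With these numbers the UNet weights alone come to about 3.2 GiB in FP32 versus about 1.6 GiB in FP16, which hints at why halving the element size (and swapping models out to storage when they aren't needed) helps stay under the limit.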

On macOS, Maple Diffusion uses slightly more memory (~6GB) to reach <1 s/step.

Usage

To build and run Maple Diffusion:

- Download a Stable Diffusion model checkpoint (`sd-v1-4.ckpt`, or some derivation thereof).

- Download this repo:

      git clone https://github.com/madebyollin/maple-diffusion.git && cd maple-diffusion

- Convert the model into a bunch of fp16 binary blobs. You might need to install PyTorch and stuff.

      ./maple-convert.py ~/Downloads/sd-v1-4.ckpt

- Open, build, and run the `maple-diffusion` Xcode project. You might need to set up code signing and stuff.
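
The conversion step produces fp16 binary blobs, but this README doesn't describe their layout. Assuming each blob is a headerless array of little-endian IEEE 754 half-precision values (an assumption; check `maple-convert.py` for the real format), a portable Swift decoder might look like this. It decodes the bits by hand rather than using `Float16`, which isn't available on every Apple target (notably Intel Macs). All function names here are hypothetical.

```swift
import Foundation

// Hypothetical loader for one converted weight blob.
// ASSUMPTION: headerless, little-endian IEEE 754 binary16 values, back to back.

/// Decodes one binary16 bit pattern into a Float without using `Float16`.
func halfToFloat(_ bits: UInt16) -> Float {
    let sign: FloatingPointSign = (bits & 0x8000) != 0 ? .minus : .plus
    let exponent = Int((bits >> 10) & 0x1F)
    let mantissa = Float(bits & 0x03FF)
    switch exponent {
    case 0:
        // Zero or subnormal: 0.mantissa × 2^-14
        return Float(sign: sign, exponent: -14, significand: mantissa / 1024)
    case 0x1F:
        // All-ones exponent: infinity if the mantissa is zero, otherwise NaN
        if mantissa == 0 { return sign == .minus ? -Float.infinity : Float.infinity }
        return Float.nan
    default:
        // Normal: 1.mantissa × 2^(exponent - 15)
        return Float(sign: sign, exponent: exponent - 15, significand: 1 + mantissa / 1024)
    }
}

/// Decodes raw little-endian FP16 bytes into Floats; a trailing odd byte is ignored.
func decodeFP16Blob(_ bytes: [UInt8]) -> [Float] {
    var values: [Float] = []
    values.reserveCapacity(bytes.count / 2)
    var i = 0
    while i + 1 < bytes.count {
        let bits = UInt16(bytes[i]) | (UInt16(bytes[i + 1]) << 8)
        values.append(halfToFloat(bits))
        i += 2
    }
    return values
}

/// Reads and decodes a blob from disk; returns nil if the file can't be read.
func loadFP16Blob(atPath path: String) -> [Float]? {
    guard let data = FileManager.default.contents(atPath: path) else { return nil }
    return decodeFP16Blob([UInt8](data))
}
```

For example, `decodeFP16Blob([0x00, 0x3C, 0x00, 0xC0])` returns `[1.0, -2.0]`.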