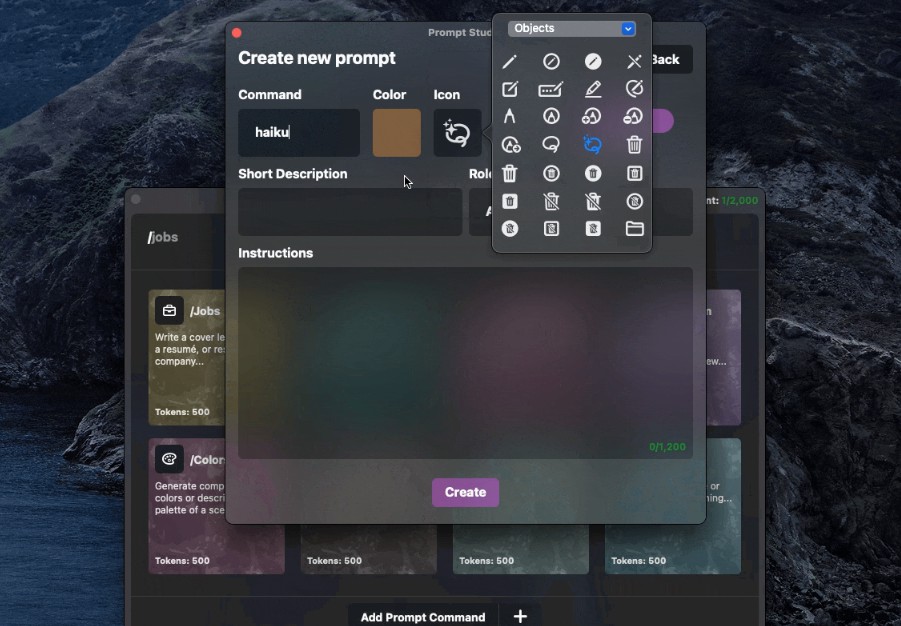

Nea (macOS)

A 100% SwiftUI GPT/LLM client with prompt-engineering support, including Microsoft’s Azure OpenAI functionality.

| General Query View | Prompt Studio |
|---|---|

Requirements

macOS 12.4+

Setting Up Azure OpenAI

The repo will be updated to support this out of the box on the front end. Until then, replace the `{RESOURCE}` and `{DEPLOYMENT_NAME}` placeholders in the endpoint constants:

```swift
public static let base = "https://{RESOURCE}.openai.azure.com/"
public static let v1ChatCompletion = "openai/deployments/{DEPLOYMENT_NAME}/chat/completions"
public static let v1Completion = "openai/deployments/{DEPLOYMENT_NAME}/completions"
```

Then pass `.azure` when creating the client:

```swift
self.client = ChatGPT(apiKey: apiKey, api: .azure)
```
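For reference, a minimal sketch of what the filled-in endpoints resolve to. `AzureOpenAIEndpoint` is a hypothetical helper, not part of SwiftGPT. Azure OpenAI's REST API expects an `api-version` query parameter and an `api-key` header, but whether SwiftGPT adds them internally is not shown here, and `2023-05-15` is just one published API version.

```swift
import Foundation

/// Hypothetical helper (not part of SwiftGPT) that fills in the
/// {RESOURCE} and {DEPLOYMENT_NAME} placeholders from the constants above.
struct AzureOpenAIEndpoint {
    let resource: String    // Azure OpenAI resource name, e.g. "my-resource"
    let deployment: String  // model deployment name created in the Azure portal
    let apiVersion: String  // Azure requires an api-version query parameter

    var base: String { "https://\(resource).openai.azure.com/" }

    /// Joins base + path and appends ?api-version=...
    func url(path: String) -> URL? {
        var components = URLComponents(string: base + path)
        components?.queryItems = [URLQueryItem(name: "api-version", value: apiVersion)]
        return components?.url
    }

    var chatCompletionsURL: URL? {
        url(path: "openai/deployments/\(deployment)/chat/completions")
    }

    var completionsURL: URL? {
        url(path: "openai/deployments/\(deployment)/completions")
    }

    /// Azure authenticates with an `api-key` header rather than `Authorization: Bearer`.
    func headers(apiKey: String) -> [String: String] {
        ["api-key": apiKey, "Content-Type": "application/json"]
    }
}
```

With `resource: "my-resource"`, `deployment: "gpt-35"` and `apiVersion: "2023-05-15"`, `chatCompletionsURL` resolves to `https://my-resource.openai.azure.com/openai/deployments/gpt-35/chat/completions?api-version=2023-05-15`.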

Persisting Chat Messages

The repo will be updated to support this out of the box on the front end in the future.

In `SandGPT` (`Nea/Services/Sand/Models/SandGPT.swift`, line 48 at commit `24fbcfb`):

Keep an array of `ChatMessage` values and pass it to the Swift package on each request:

```swift
private var messages: [ChatMessage] = []
```

```swift
func ask<E: EventExecutable>(_ prompt: String,
                             withSystemPrompt systemPrompt: String? = nil,
                             withConfig config: PromptConfig,
                             stream: Bool = false,
                             logMessages: Bool = false,
                             event: E) {
    // Body excerpted in the snippets below.
}
```

Inside `ask`, append the user prompt with the `.user` role:

```swift
self.messages.append(.init(role: .user, content: prompt)) // add user prompt
```

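After the request completes, append the chatbot’s response with the `.assistant` role:

```swift
// Excerpt: runs inside a Task that captures `self` weakly; `reply` is declared earlier in ask(...).
reply = try await client.ask(messages: self?.messages ?? [],
                             withConfig: config)

DispatchQueue.main.async { [weak self] in
    self?.isResponding = false
}

// The reply is only added to the history when logMessages is true.
if logMessages {
    self?.messages.append(.init(role: .assistant, content: reply))
}

event.send(Response(data: reply, isComplete: true, isStream: false))
```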

The `.system` role is useful for setting a persona before any messages are collected:

```swift
self?.messages.append(.init(role: .system, content: "Act as..."))
```
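Putting the pieces together, a minimal self-contained sketch of the history flow. `ChatMessage` here is a local stand-in for the SwiftGPT type, `ChatHistory` and `turn` are hypothetical names, and the `send` closure stands in for `client.ask(messages:withConfig:)`. Unlike `SandGPT`, which records replies only when `logMessages` is true, this sketch always records them.

```swift
/// Stand-in for SwiftGPT's `ChatMessage` so this sketch compiles on its own.
struct ChatMessage: Equatable {
    enum Role { case system, user, assistant }
    let role: Role
    let content: String
}

/// Hypothetical history store mirroring the flow above (not code from Nea).
struct ChatHistory {
    private(set) var messages: [ChatMessage] = []

    /// Keeps a single persona message at the front of the history.
    mutating func setPersona(_ text: String) {
        messages.removeAll { $0.role == .system }
        messages.insert(ChatMessage(role: .system, content: text), at: 0)
    }

    /// Records the user's prompt and returns the full context to send.
    mutating func prepare(prompt: String) -> [ChatMessage] {
        messages.append(ChatMessage(role: .user, content: prompt))
        return messages
    }

    /// Records the reply so the next request carries the whole conversation.
    mutating func record(reply: String) {
        messages.append(ChatMessage(role: .assistant, content: reply))
    }
}

/// One request/response turn; `send` stands in for the real client call.
func turn(_ prompt: String, history: inout ChatHistory,
          send: ([ChatMessage]) -> String) -> String {
    let reply = send(history.prepare(prompt: prompt))
    history.record(reply: reply)
    return reply
}
```

Because `setPersona` removes any earlier system message before inserting at index 0, changing the persona mid-conversation keeps exactly one system message at the front.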

It also helps to let `reset()` in `SandGPT` clear the stored messages:

```swift
func reset(messages: Bool = false) {
    self.currentTask?.cancel()
    self.reqDebounceTimer?.invalidate()
    self.replyCompletedTimer?.invalidate()
    self.isResponding = false

    // Only wipe the conversation history when explicitly requested.
    if messages {
        self.messages = []
    }
}
```
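A trimmed-down, hypothetical `ChatSession` shows the contract `reset(messages:)` is meant to keep: cancel in-flight work, drop the responding state, and clear history only when asked. The debounce and completion timers from `SandGPT` are left out, and `Task.sleep` stands in for the network call.

```swift
/// Hypothetical session object (not Nea's SandGPT) illustrating reset(messages:).
final class ChatSession {
    private(set) var messages: [String] = []
    private(set) var isResponding = false
    private(set) var currentTask: Task<Void, Never>?

    /// Starts a fake request; the sleep stands in for the network call.
    func ask(_ prompt: String) {
        messages.append(prompt)
        isResponding = true
        currentTask = Task {
            // Cancellation makes Task.sleep throw early, ending the task.
            try? await Task.sleep(nanoseconds: 5_000_000_000)
        }
    }

    /// Cancels in-flight work; clears history only when asked to.
    func reset(messages clearMessages: Bool = false) {
        currentTask?.cancel()
        currentTask = nil
        isResponding = false
        if clearMessages {
            messages = []
        }
    }
}
```

Keeping history by default means a stuck request can be cancelled without losing the conversation.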

Guide

PaneKit

- Handles window resizing and size management.

Declaratively update a single window’s size whenever an action requires it:

```swift
state.pane?.display {
    WindowComponent(WindowComponent.Kind.query)

    if addResponse {
        WindowComponent(WindowComponent.Kind.divider)
        WindowComponent(WindowComponent.Kind.response)
        WindowComponent(WindowComponent.Kind.spacer)
        WindowComponent(WindowComponent.Kind.shortcutbar)
    }
}
```
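To illustrate the declarative idea (not PaneKit's actual implementation), here is a hypothetical result builder that collects `WindowComponent`s, including those inside an `if`, and derives a window height from them. The component heights are made-up values; a real pane would apply the result to its window, for example with `NSWindow.setContentSize(_:)`.

```swift
/// Hypothetical stand-in for Nea's WindowComponent; heights are illustrative only.
struct WindowComponent {
    enum Kind { case query, divider, response, spacer, shortcutbar }

    let kind: Kind
    init(_ kind: Kind) { self.kind = kind }

    var height: Double {
        switch kind {
        case .query: return 60
        case .divider: return 1
        case .response: return 300
        case .spacer: return 8
        case .shortcutbar: return 30
        }
    }
}

/// Collects components declared inside a `display { ... }` closure, including `if` blocks.
@resultBuilder
enum PaneLayoutBuilder {
    static func buildExpression(_ component: WindowComponent) -> [WindowComponent] { [component] }
    static func buildBlock(_ parts: [WindowComponent]...) -> [WindowComponent] { parts.flatMap { $0 } }
    static func buildOptional(_ part: [WindowComponent]?) -> [WindowComponent] { part ?? [] }
}

/// Hypothetical pane that recomputes its height whenever its contents are declared.
final class Pane {
    private(set) var components: [WindowComponent] = []
    var height: Double { components.reduce(0) { $0 + $1.height } }

    func display(@PaneLayoutBuilder _ content: () -> [WindowComponent]) {
        components = content()
        // A real implementation would resize its window here.
    }
}
```

Because the whole layout is re-declared on each call, the pane never has to track which components were added or removed since the last resize.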

InteractionManager

- Handles popups and hotkey observation/registration.

Example of using `PopupableView` to trigger a popup from any window instance:

```swift
PopupableView(.promptStudio,
              size: .init(200, 200),
              edge: .maxX, {
    RoundedRectangle(cornerRadius: 6)
        .frame(width: 60, height: 60)
        .foregroundColor(Color(hex: promptColor))
}) {
    ColorPicker(hex: $promptColor)
}
.frame(width: 60, height: 60)
```
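`InteractionManager`'s hotkey internals aren't shown in this README. As a rough, hypothetical illustration of the registration/observation side, a registry can map key-plus-modifier combinations to actions. On macOS the key events would typically come from an AppKit monitor such as `NSEvent.addLocalMonitorForEvents(matching:handler:)`, which this platform-independent sketch leaves out; `Modifiers`, `Hotkey` and `HotkeyRegistry` are assumed names.

```swift
/// Hypothetical modifier flags (AppKit's NSEvent.ModifierFlags plays this role on macOS).
struct Modifiers: OptionSet, Hashable {
    let rawValue: Int
    static let command = Modifiers(rawValue: 1 << 0)
    static let option  = Modifiers(rawValue: 1 << 1)
    static let shift   = Modifiers(rawValue: 1 << 2)
    static let control = Modifiers(rawValue: 1 << 3)
}

/// A key plus its modifiers; Hashable so it can key a dictionary.
struct Hotkey: Hashable {
    let key: Character
    let modifiers: Modifiers
}

/// Hypothetical registry: register actions, then feed it observed key events.
final class HotkeyRegistry {
    private var handlers: [Hotkey: () -> Void] = [:]

    func register(_ hotkey: Hotkey, action: @escaping () -> Void) {
        handlers[hotkey] = action
    }

    func unregister(_ hotkey: Hotkey) {
        handlers[hotkey] = nil
    }

    /// Runs the matching action; returns false so unhandled events can propagate.
    @discardableResult
    func handle(key: Character, modifiers: Modifiers) -> Bool {
        guard let action = handlers[Hotkey(key: key, modifiers: modifiers)] else { return false }
        action()
        return true
    }
}
```

Returning `false` for unmatched combinations lets the caller pass the event on instead of swallowing it.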

FAQ

Why chat completions over completions for prompts?

- No specific reason, other than that I found it gave better results for my own needs.

- Support for the completions endpoint will be added soon, along with a toggle to switch between the two.
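The planned toggle could be as small as an enum that picks the endpoint path and request body. This is a hypothetical sketch (`CompletionMode` is not in the repo): chat completions take a `messages` array with roles, while the completions endpoint takes a single `prompt` string, so a system prompt has to be folded into the text.

```swift
/// Hypothetical toggle between the two endpoints from the Azure setup section.
enum CompletionMode {
    case chat        // openai/deployments/{DEPLOYMENT_NAME}/chat/completions
    case completion  // openai/deployments/{DEPLOYMENT_NAME}/completions

    func path(deployment: String) -> String {
        switch self {
        case .chat: return "openai/deployments/\(deployment)/chat/completions"
        case .completion: return "openai/deployments/\(deployment)/completions"
        }
    }

    /// Builds the JSON-ready body; only the fields that differ between modes are shown.
    func body(systemPrompt: String?, prompt: String) -> [String: Any] {
        switch self {
        case .chat:
            var messages: [[String: String]] = []
            if let systemPrompt {
                messages.append(["role": "system", "content": systemPrompt])
            }
            messages.append(["role": "user", "content": prompt])
            return ["messages": messages]
        case .completion:
            // No roles here, so any persona text is prepended to the prompt.
            let text = [systemPrompt, prompt].compactMap { $0 }.joined(separator: "\n\n")
            return ["prompt": text]
        }
    }
}
```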

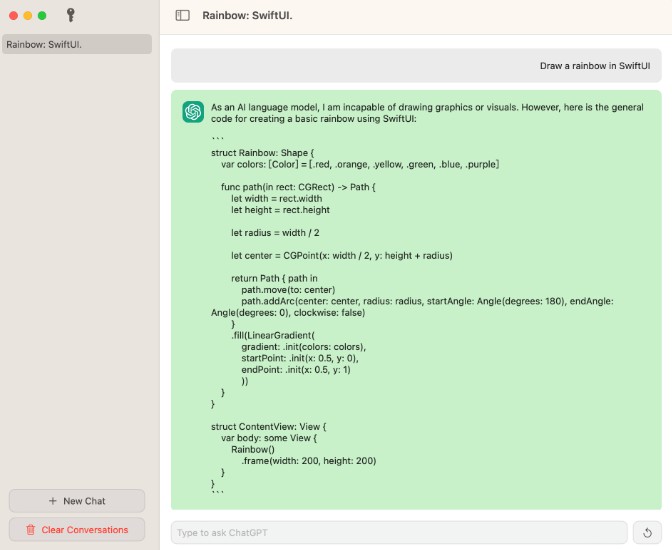

More Previews

| Advanced Tuning | Helper Tab |
|---|---|

| Using Commands | Switching Commands |
|---|---|

TODO

- Clean up the Azure OpenAI integration in SwiftGPT.

- Integrate Azure and chat history properly into the main app, then clean up the README.